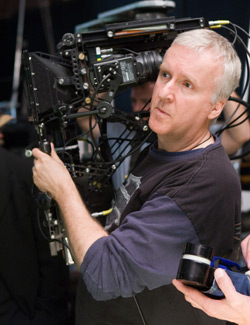

James Cameron’s Stereo-3D Epic Demanded Workflow Innovation

So that Cameron could see the CG characters’ expressions on stage, and so animators had data to work with in post-production, Weta Digital created a facial capture system. The actors wore helmets with attached cameras that captured lip movement, eye movement, and dots painted on the actors’ faces. Weta’s software translated the data and applied it to a blend-shape-based facial-animation system. The visual-effects studio also turned the video-game-quality graphics from Lightstorm into lush environments and believable characters, and refined the motion-capture data.

Capturing Performances and Camera Moves

But much of the film was entirely CG. For that, the layout artists performed a different role. “We needed a group to handle and push data through the pipeline,” says Shawn Dunn, layout supervisor. “Layout for Avatar was very different from what we typically call layout.” Dunn began working on the project in 2006 and continued pushing data into the pipeline until the last shot finaled three-and-a-half years later.

In addition to capturing the actors’ performances, Lightstorm and Giant also captured cameras on stage and a virtual camera – a 9-inch LCD screen surrounded by a steering wheel – that Cameron used to pan, zoom, and move within the virtual environment. “The cameras came in through FBX files,” Dunn says. “We’d move the data to our proprietary stereo camera rig and publish it in the right world space. In layout, we often work with directors to change the cameras, but we didn’t change Jim’s cameras.” Anyone in the pipeline could then see Pandora through Cameron’s eyes.

But that was only part of the layout process for this film. “Layout became the front end of the pipeline,” Dunn says. “But we also worked way into the process, pulling data in and giving it to the models, motion edit, animation and shots department.” The shots department at Weta packages together data from the animators, high-resolution geometry for characters and the environment, and simulation data, and then lights and renders the shots.

"There Are So Many Assets, It’s Unbelievable"

And such data. During the third-act battle, CG characters run through landscapes filled with 100,000 digital trees, rocks, bushes and vines. “There are so many assets, it’s unbelievable,” Dunn says.

To create these sequences and others, actors Sam Worthington (as Jake, a paraplegic who inhabits a Na’vi avatar to immerse himself in Na’vi culture) and Zoë Saldana (as Neytiri, a Na’vi princess) ran along shapes on the floor of a set, and Cameron filmed their CG doppelgangers running through a CG environment. Lightstorm sent Weta that environment, the camera moves, and the motion-capture data, as well as data from the HD reference cameras.

“Lightstorm would provide us with a great template with the rough environment for all the scenes, so we had a good guide to draw from,” Dunn says. “It gave us the shapes, the positions of the plants, and generally what Jim [Cameron] was looking for. If you were to look at a template and at a final shot, they would be similar. If the characters ran on a tree branch, their blocking and positions would be identical.” Similar in placement, but not in detail.

“We’d look at the template on a per-scene basis and break down the environments to tell the modelers what models we needed,” Dunn says. “This type of tree, this type of bush, some vines, branches, a ground plane. Then, we’d spend time dressing the set. Everything the characters interacted with or touched was especially important.”

He continues, “Jim would decide where he wanted to shoot within a set they built – say a forest with a stream down the middle. It was like location scouting. If he shot close to the edge of the environment, the template didn’t give us much to work with. If he shot inside, we’d have low-resolution polygonal models up close and image planes outside that with paintings on them – vine cards, fog cards, and so forth. They kept the environments as low resolution as possible to run them in real time. It was up to us to interpret them and build the high-res environment.”

Growing Massive Flora

To help speed the set-dressing process, the artists could use custom painting tools to position plants in the landscape. In addition, the set dressers used Massive software to grow the forests. Because the characters needed to move through a stereo 3D space, the crew decided not to instance plants during rendering. The plants were all 3D; modelers built each using a rule-based system. As a result, some scenes had hundreds of millions of polygons, with some individual plants having as many as one million polygons. Weta developed methods for managing all that complexity.

Recycling Program

“We had tools for tracking assets in scenes and for determining which assets were new,” Dunn says. “As the movie progressed, we would see some assets over and over, so we had systems that would look at the templates, see if we had anything already built that was valid for that set, and replace those assets with our high-resolution models. At the same time, we were building all the new ones we needed for the scenes.”
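The reuse check Dunn describes can be pictured as a lookup against a library of models that have already been built. The sketch below is only an illustration under stated assumptions: the function name, the asset names, and the idea of keying the library by template asset name are all hypothetical, and the article does not describe how Weta's tracking tools were actually implemented.

```python
def plan_set_dressing(template_assets, built_library):
    """Split a template's asset list into reusable and still-to-build assets.

    template_assets: names of low-resolution assets found in a template
                     (hypothetical naming scheme).
    built_library:   dict mapping an asset name to an existing
                     high-resolution model (hypothetical structure).
    """
    reuse = {}      # template asset -> high-res model that can replace it
    to_build = []   # assets the modelers still need to create
    for name in template_assets:
        if name in built_library:
            reuse[name] = built_library[name]
        elif name not in to_build:
            # Record each missing asset once, even if it appears many times.
            to_build.append(name)
    return reuse, to_build


template = ["fern_a", "vine_b", "fern_a", "tree_c"]
library = {"fern_a": "hires/fern_a"}
reuse, to_build = plan_set_dressing(template, library)
```

In this toy run, `fern_a` is swapped for its existing high-resolution model, while `vine_b` and `tree_c` go to the modelers as new work.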

The shots department, which received the high-resolution environments from layout, employed such techniques as stochastic pruning to reduce the geometric resolution for plants in the distance, and spherical harmonics, a rendering technique used for games, which made it possible to light the rainforest.
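The article names stochastic pruning without describing Weta's implementation. As a rough illustration of the general idea only, the sketch below drops a growing share of a plant's leaves as the plant recedes from the camera and enlarges the survivors so the total leaf area stays the same. The function names, the `near` and `falloff` parameters, and the simple falloff rule are assumptions made for this example.

```python
import random


def keep_fraction(distance, near=10.0, falloff=1.0):
    """Fraction of elements to keep: all of them up to `near`, fewer beyond it."""
    if distance <= near:
        return 1.0
    return (near / distance) ** falloff


def prune(leaves, distance, seed=0):
    """Return a reduced list of (leaf_id, area) pairs for a plant at `distance`.

    Leaves are shuffled once with a fixed seed so the same leaves are dropped
    every time, then the first N are kept and their areas scaled up to
    compensate for the ones removed.
    """
    order = list(leaves)
    random.Random(seed).shuffle(order)
    count = max(1, round(len(order) * keep_fraction(distance)))
    scale = len(order) / count  # enlarge survivors to preserve total area
    return [(leaf_id, area * scale) for leaf_id, area in order[:count]]


leaves = [(i, 1.0) for i in range(1000)]
close_up = prune(leaves, distance=5.0)    # every leaf kept
far_away = prune(leaves, distance=100.0)  # one leaf in ten kept, each 10x larger
```

Because the shuffle order is fixed, the leaves kept at a greater distance are always a subset of those kept closer in, which avoids leaves popping in and out as the camera moves.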

In addition to breaking down environments for modelers and providing high-res environments to the shots department, the layout department also helped ready the motion-capture data for motion editors who would apply the data to the CG characters and send it on to the animators. “When they captured the performances, they worked in stage space,” Dunn says. “The character, their bow, what they were riding would all be captured in stage space. All that data then needed to be located in the 3D world.”

If a shot had four horses and a rider, for example, the layout artists made sure the male Na’vi was on the fourth horse, had the right weapon, and had the right arrows in his bow in the correct position in 3D space.
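Locating stage-space data in the 3D world amounts to applying one shared transform to everything captured together. The sketch below assumes a Y-up coordinate system and a transform made of a rotation about the vertical axis plus a translation; the function name and the sample values are hypothetical, and the real pipeline presumably handled full transforms inside its own tools.

```python
import math


def stage_to_world(point, yaw_degrees, offset):
    """Rotate a stage-space point about the vertical (Y) axis, then translate it."""
    x, y, z = point
    angle = math.radians(yaw_degrees)
    world_x = x * math.cos(angle) + z * math.sin(angle)
    world_z = -x * math.sin(angle) + z * math.cos(angle)
    ox, oy, oz = offset
    return (world_x + ox, y + oy, world_z + oz)


# Hypothetical stage-space positions for a rider and the bow he carries.
rider = (1.0, 0.0, 0.0)
bow = (1.0, 1.5, 0.0)

# Applying the same transform to both keeps the bow in the rider's hand.
placement = dict(yaw_degrees=90.0, offset=(100.0, 5.0, -20.0))
rider_world = stage_to_world(rider, **placement)
bow_world = stage_to_world(bow, **placement)
```

Using a single placement for the character, the prop, and the mount preserves their relative positions from the capture stage.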

So Big It Breaks the Math

“The third-act battle was so huge and the characters so far from [the zero point of all three axes] in 3D space, we would get round-off errors,” Dunn says. “If we needed to run cloth sims or particle sims or even deformation on characters, we transferred them closer to origin, so motion-edit knew where to publish the data.”
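The round-off problem Dunn mentions is a general property of floating-point numbers: the farther a value is from zero, the coarser the spacing between representable values. The sketch below assumes 32-bit floats and scene units chosen purely for illustration (the article specifies neither), and the helper names are hypothetical. Near one million units, adjacent 32-bit values are 0.0625 apart, so a 0.013-unit detail vanishes; after subtracting a nearby pivot, the same detail is stored almost exactly.

```python
import struct


def to_float32(value):
    """Round a Python float (64-bit) to the nearest 32-bit float value."""
    return struct.unpack("f", struct.pack("f", value))[0]


def recenter(points, pivot):
    """Move points near the origin by subtracting a shared pivot."""
    return [tuple(c - p for c, p in zip(point, pivot)) for point in points]


def restore(points, pivot):
    """Move recentered points back to their original location."""
    return [tuple(c + p for c, p in zip(point, pivot)) for point in points]


# A vertex far from the origin with a small offset that matters to a sim.
far_vertex = (1_000_000.013, 0.0, 0.0)
pivot = (1_000_000.0, 0.0, 0.0)
local = recenter([far_vertex], pivot)[0]

stored_far = to_float32(far_vertex[0])  # the 0.013 offset is rounded away
stored_local = to_float32(local[0])     # the 0.013 offset survives
```

A simulation run on the recentered points works with the precise local values, and `restore` puts the results back where the rest of the pipeline expects them.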

“Having the right assets to interact with makes it easier for the entire pipeline,” Dunn says. “We were responsible for how information flows through animation, and we passed similar information to the shots department so everyone worked off the same tree.”

For example, the layout department provided to the simulation team assets that needed to break, water planes that needed to flow, and other information. For matte painters, they sent 3D geometry for far backgrounds; for animators, plants that moved. “We’d define which plants needed to be knocked over or broken,” Dunn says. “If a character touched a leaf or pushed it out of the way, we’d provide an asset for the animators so they could have the character interact with it.”

As filmmakers such as Cameron further mingle the worlds of live action and animation and do so in stereo 3D with great volume and complexity, studios such as Weta Digital find themselves developing new processes on the fly to encompass both worlds simultaneously. “We’ve had a layout team at Weta for a while,” Dunn says. “But we put a lot of stuff in place that was particular to this process, and we did a lot of it from scratch. It was a huge undertaking.”
