Note: Roger Beck is an independent consultant who also supports, works with and represents companies on a day to day basis. The opinions expressed in this article are his own and he wishes to stress that the ultimate decision on storage and workflow solutions for media must be built around many factors, including usability, support and fit for purpose on a project-by-project basis.

Since it is an essential requirement for every computer, the file system has been taken for granted for a long time. There are more than 85 different choices for local file systems (at least that was the case last time I counted), including HFS(P), APFS, FAT32, NTFS, EXT4, XFS, and Btrfs to name just a few, all covering various kinds of hardware and providing a variety of features. You can find many informative articles explaining the differences in performance and limitations of local file systems, so I want to focus here on shared file systems — a whole different beast. Let’s start with a short trip into the past, to help us figure out why there are so many file systems to choose from in the first place.

Know the Past to Understand the Present – and to Be Prepared for the Future

Not that long ago, really, we were grateful for the ability to share data amongst computers at all, utilizing protocols such as Network File System (NFS) for the *nix world, the Common Internet File System (CIFS, aka Server Message Block or SMB) for Windows machines, followed by the Apple Filing Protocol (AFP, aka AppleShare) for Apple computers. Network speeds were an earth-shattering 10 Mbit then, and with a lot of money, or a little luck, you might even have had an Asynchronous Transfer Mode (ATM) based network, providing a mind-blowing speed of 155 Mbit.

Performance has multiplied dramatically since then, with 40 Gbit basically being the standard today, and 100 Gbit right on the horizon — and that’s just for the Ethernet protocol. The development was similar with Fibre Channel (FC). A then-phenomenal throughput of 1 Gbit in 1997 has jumped to 128 Gbit some 20 years later, at least with adequate switches available today from vendors such as Brocade. In addition to these, the two most popular topologies for shared file systems, there are of course InfiniBand (IB) and Serial Attached SCSI (SAS), more commonly used for server-to-server or RAID-system-to-JBOD expansion, etc.

The Big Shift

Demands on network performance and speed skyrocketed when the movie industry started the digital revolution and created more and more computer-generated (CG) imagery. From 3D CG to VFX to color correction, every step in the production and post-production process of moving pictures was suddenly generating numerous digital media files. Instead of countless video tapes piling up in the archives of post-production facilities, unstructured (or “unfiled”) media files flooded the IT servers, creating data chaos and leaving IT personnel to invest a lot of manual effort in detangling the mess.

In the early 2000s, the hype about HD 1920×1080 (and later UHD 3840×2160) formats cranked up the demands even further, as file sizes suddenly were multiplied by four, forcing IT networks to keep up with the increased workload. (What a shame that it took more than 20 years for the official introduction of HD formats — if, in the early 90s, anyone had listened to the Digital HDTV Grand Alliance, who won an Emmy Award in 1997, we would have enjoyed HDTV a lot earlier.)

With this unstoppable evolution, clustered (or shared) file systems arose. The first attempts to attach storage to the network (network-attached storage or NAS) turned out to be a huge performance bottleneck. Direct-attached storage (DAS) wasn’t that practical either, as data had to be duplicated and transferred to be available on other machines.

Instead, storage area networks (SANs) were employed, connecting attached devices with Fibre Channel. Without touching the ongoing controversy over whether Fibre Channel or Ethernet is the better choice, and while acknowledging that the payloads of Ethernet frames and Fibre Channel frames are almost identical, the basic concept of a SAN-based file system has one clear upside: it splits the metadata from the user data, and therefore lets clients access the user data straight from the storage at high speed rather than going through one single server. The metadata itself still travels over Ethernet and TCP/IP, maybe because the Fibre Channel Protocol (FCP) runs over a practically lossless transport and retransmission of frames is simply not built in. Before the introduction of 16 Gbit FC, there was no forward error correction (FEC), and corrupted frames would simply be discarded or, even worse, could create unpleasant I/O timeouts of 30-60 seconds.
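The split between metadata and user data can be illustrated with a toy model. This is only a sketch under stated assumptions: the class and function names (`MetadataServer`, `SharedLun`, `write_file`, `read_file`) are hypothetical and do not correspond to any vendor's API, and the "LUN" is simply an in-memory byte array. The point is the access pattern: the client asks the metadata server where a file lives, then moves the payload directly to or from the shared storage.

```python
from dataclasses import dataclass, field
from typing import Dict, List

BLOCK_SIZE = 4  # bytes per block; tiny on purpose so the example is easy to follow


@dataclass
class Extent:
    start_block: int
    block_count: int


@dataclass
class MetadataServer:
    """Hypothetical MDS: knows where files live, never touches file contents."""
    extents: Dict[str, List[Extent]] = field(default_factory=dict)
    next_free_block: int = 0

    def allocate(self, name: str, size_bytes: int) -> List[Extent]:
        blocks = -(-size_bytes // BLOCK_SIZE)  # ceiling division
        extent = Extent(self.next_free_block, blocks)
        self.next_free_block += blocks
        self.extents[name] = [extent]
        return self.extents[name]

    def lookup(self, name: str) -> List[Extent]:
        return self.extents[name]


class SharedLun:
    """Stand-in for a data LUN that every client can reach directly."""

    def __init__(self, blocks: int) -> None:
        self.raw = bytearray(blocks * BLOCK_SIZE)

    def write(self, extent: Extent, data: bytes) -> None:
        offset = extent.start_block * BLOCK_SIZE
        self.raw[offset:offset + len(data)] = data

    def read(self, extent: Extent, length: int) -> bytes:
        offset = extent.start_block * BLOCK_SIZE
        return bytes(self.raw[offset:offset + length])


def write_file(mds: MetadataServer, lun: SharedLun, name: str, data: bytes) -> None:
    (extent,) = mds.allocate(name, len(data))  # small metadata request
    lun.write(extent, data)                    # bulk data goes straight to storage


def read_file(mds: MetadataServer, lun: SharedLun, name: str, length: int) -> bytes:
    (extent,) = mds.lookup(name)               # small metadata request
    return lun.read(extent, length)            # bulk data comes straight from storage


mds, lun = MetadataServer(), SharedLun(blocks=16)
write_file(mds, lun, "shot_001.dpx", b"hello")
print(read_file(mds, lun, "shot_001.dpx", 5))  # b'hello'
```

In this model the metadata server only ever handles names and block ranges, which is why a single server can coordinate many clients without becoming the data bottleneck.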

FC has some general advantages over Ethernet in terms of speed, reliability and its dedicated network, isolated from the general network traffic. At the same time, that dedicated network is also the one major downside of FC, as maintaining it is more costly. FC is also more expensive when it comes to initial investment. Yet FC has become the de facto standard for storage connections.

Because FC is based on SCSI, ESCON and HIPPI (who remembers that?), it was only logical to eventually build a file system that was pretty close to the storage’s own transport protocol.

Early adopters of FC-based technologies developed the first clustered file systems, starting in the mid-90s. One of the first was Mountain Gate with the very first shared file system, Centra Vision File System (CVFS). Mountain Gate was acquired by ADIC in 1999, and the technology eventually became Quantum’s StorNext File System (SNFS). In the late 90s, IBM’s Spectrum Scale, commonly known as General Parallel File System (GPFS), started as MultiMedia File System, and SGI joined the game with their Clustered Extents File System (CXFS), also known as InfiniteStorage Shared File System. In 2003, an open-source file system called Lustre was born, and the latest player in the field came from the Chinese Academy of Sciences in 2006, with a project named Blue Whale Clustered File System (BWFS), aka HyperFS, completing the group of five file systems that support FC as their base protocol.

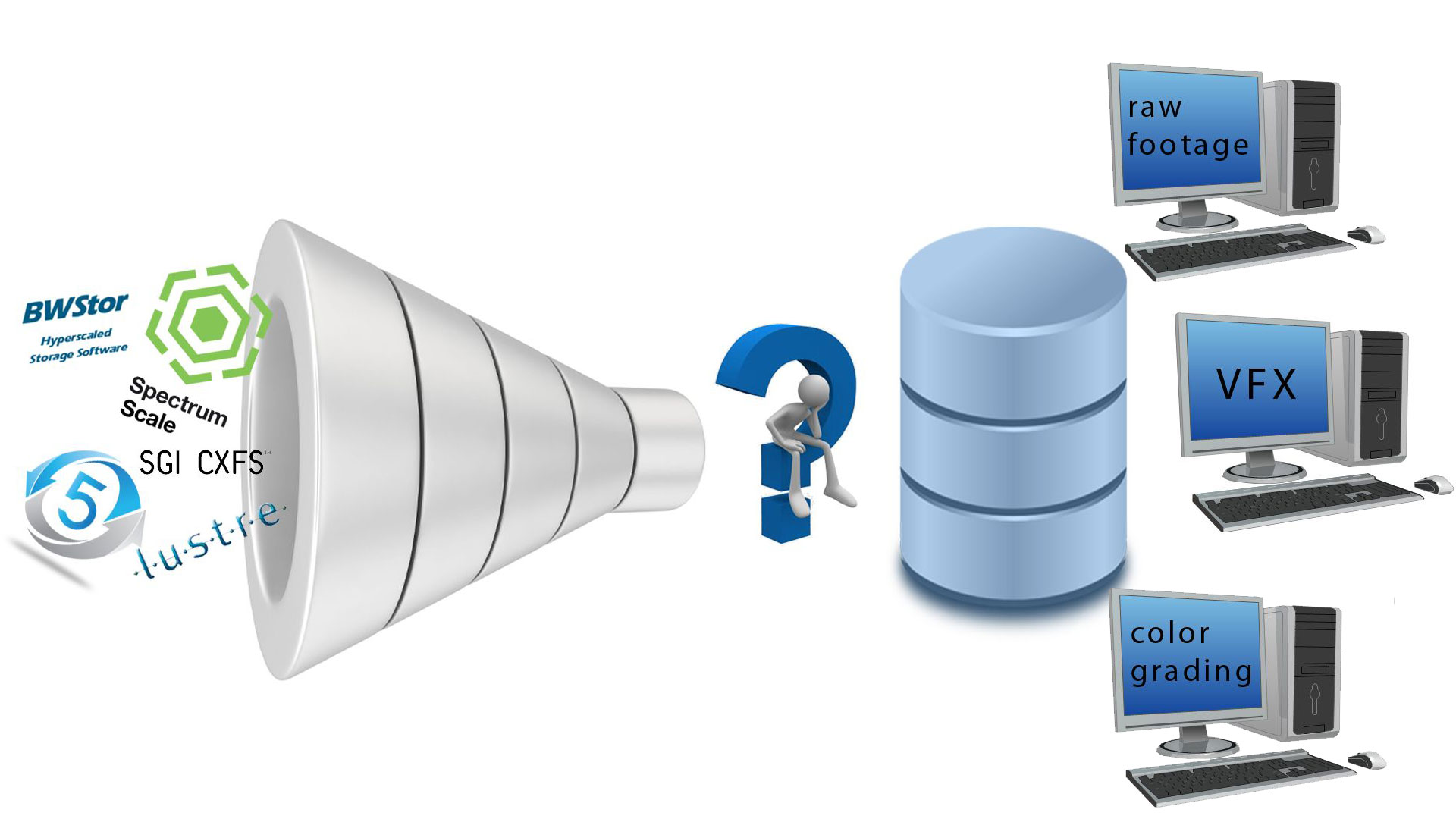

So What Is the Best FC-based File System?

As usual, there’s no straight answer to this question. It depends on your particular situation.

When selecting an FC-based file system, you may want to take several parameters into account, ranging from client support to usability (or rather ease of use). You need to consider how many and which particular clients will need high throughput, like 4K raw footage (~1300 MB/sec) via Fibre Channel. While all five shared FC-based file systems can deliver very high performance pushing data through the wire, not all of them natively support all three major worlds of OS X, Linux and Windows. All those platforms are supported by BWFS, CXFS and SNFS, but bear in mind that GPFS has no OS X support and Lustre is merely for UNIX/Linux environments.
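To see where a figure like ~1300 MB/sec can come from, here is a back-of-the-envelope calculation. It is a sketch, not a sizing tool: the frame geometry (4096×2160, three channels, 16 bits per channel, 24 frames per second) is an assumption chosen for illustration, and the 1600 MB/sec link figure is likewise an assumed nominal value for a single 16 Gbit FC port. Real formats, container overhead and usable link throughput will differ.

```python
def stream_rate_mb_per_s(width: int, height: int, channels: int,
                         bits_per_channel: int, fps: int) -> float:
    """Uncompressed data rate of one video stream in MB/s (1 MB = 10**6 bytes)."""
    bytes_per_frame = width * height * channels * bits_per_channel // 8
    return bytes_per_frame * fps / 1_000_000


def streams_per_link(link_mb_per_s: float, stream_mb_per_s: float) -> int:
    """How many full streams fit into the given link throughput."""
    return int(link_mb_per_s // stream_mb_per_s)


# Assumed format: 4096x2160, RGB, 16 bits per channel, 24 fps.
rate = stream_rate_mb_per_s(4096, 2160, 3, 16, 24)
print(round(rate))                    # 1274

# Assumed nominal throughput of one 16 Gbit FC port.
print(streams_per_link(1600, rate))   # 1
```

Under these assumptions a single client playing one such stream already occupies most of one port, which is why counting the high-throughput clients up front matters.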

The basic file-system design is important. With a rather simple and straightforward structure, BWFS, CXFS and SNFS are lean and comparatively easy to set up. In a minimal setup, you can start with a single metadata server/controller (MDS/MDC) and a single configuration file for the shared volume, and only need to define one LUN as metadata LUN and another LUN for data. The MDS/MDC is in charge of maintaining the inodes or B-trees that record where directories and files reside and which attributes they carry.

Lustre FS and GPFS take a different and somewhat more complex approach by managing everything through a database that resides on the disks themselves, or through several configuration files. The learning curve for configuring both Lustre and GPFS is quite steep (there is a good reason why the user manual for GPFS is about 700 pages long), so I would recommend getting at least basic training. Without a good deal of knowledge of file systems in general and how to configure Lustre FS and GPFS in particular, you might end up spending a lot of time learning by doing. Plus, even once you have configured the volume, there are plenty of options to turn knobs, flip switches and optimize it for your individual needs. Unfortunately, there is no golden setting for an optimal file system for all workflows alike, just averages and best practices for the FS block sizes, stripe sizes, buffers and so on. Setting up the perfect file system for your specific workflow will require a lot of testing and tweaking, including starting from scratch to find the best performance for your particular needs.

On the other hand, the perhaps-overwhelming complexity has its advantages. Depending on your workflow, Lustre FS and GPFS can be configured over WAN systems with multiple metadata and quorum servers. This option provides a much higher resistance to failure and therefore a higher uptime. For example, you could share a single namespace across two locations. If the main link between both sites goes dark, the site that has the most quorum servers (at least one more than the other, which is why the number of quorum nodes should be odd) can continue to write content while the other site has only read access. Once the WAN link becomes active again, the data will be redistributed automatically without any manual intervention.
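The split-site behavior described above boils down to a majority rule, which can be sketched in a few lines. This is a simplified illustration, not the actual quorum algorithm of GPFS or Lustre; the function names and the site labels are hypothetical.

```python
from typing import Dict


def has_quorum(reachable_nodes: int, total_nodes: int) -> bool:
    """A partition holds quorum only with a strict majority of quorum nodes."""
    return reachable_nodes > total_nodes // 2


def site_modes(quorum_nodes_per_site: Dict[str, int]) -> Dict[str, str]:
    """Access mode of each site once the link between the sites is down."""
    total = sum(quorum_nodes_per_site.values())
    return {
        site: "read-write" if has_quorum(count, total) else "read-only"
        for site, count in quorum_nodes_per_site.items()
    }


# Odd total: exactly one site keeps write access.
print(site_modes({"site_a": 2, "site_b": 1}))
# {'site_a': 'read-write', 'site_b': 'read-only'}

# Even split: neither site has a majority, so nobody may write.
print(site_modes({"site_a": 2, "site_b": 2}))
# {'site_a': 'read-only', 'site_b': 'read-only'}
```

The second case shows why an odd number of quorum nodes is the usual recommendation: with an even split, a link failure leaves no site with a majority.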

Also, most changes can be done on the fly without bringing the FS down, while the other FC-based file systems have to be stopped and started to re-read the configuration.

So while the initial setup and configuration process might be a little more complicated compared to the other three FC-based file systems, there are upsides. Plus, don’t worry. Many websites will assist you in getting a file system up and running.

The Easy Way vs. Doing It Right

Except for GPFS and Lustre FS, most of the modern file systems come with a convenient GUI that guides a new user through the setup. Wizards make it easy to create your first clustered file system, preventing you from falling into the common pitfalls. However, if you haven’t studied all the manuals from A to Z and are not sure how all the gears work together, you will have a working file system in the end — but not one that’s optimized.

If you really want to get the most and best performance out of your (rather expensive) distributed file system, I encourage you to dig much deeper into the matter than just clinging to the GUI. Remember the ancient saying “Real men don’t click,” and consider using the command-line interface instead. Doing it from scratch will cost more time, yes, but you will learn more and better understand how your file system works, and what options you have for adjusting and optimizing it for your particular workflow.

File Systems in the Post-Production and Broadcast Arena

After spending most of my time in the media and entertainment (M&E) vertical, I learned that, aside from performance, support for the three major operating systems (OS) is very important. Evidently, this crosses Lustre and GPFS off the list of file systems suitable for M&E pretty quickly, as neither provides native support for OS X. Unlike Lustre, GPFS at least supports Windows clients; however, the installation of a Windows client in GPFS is anything but simple. Plus, it requires the advanced Windows version (at least for official support). While the GPFS driver is kernel-based, the Cygwin environment (a Linux-like environment for Windows) has to be installed before the client can mount the GPFS volume. It feels rather clunky, considering that the driver for Windows has been around for about 15 years.

Windows clients are available for most of these file systems. SNFS and BWFS provide a pretty smooth installer that is easy to configure and mounts your volumes at the end of the installation. Some improvement would be nice for CXFS and, of course, GPFS.

On the OS X side, Xsan has been implemented natively since OS X Lion (10.7). While the configuration doesn’t really change between versions, Apple keeps moving the configuration files around for some reason. The only thing you have to do is add the IP of the MDS and configure the config.plist, once you find it. Search for help on the Internet, where some pages can guide you through the process. BWFS installs on OS X just as easily as SNFS and has a similarly lightweight configuration where you only have to touch a few files. A bit more knowledge is required when it comes to the CXFS configuration, as it’s all about fencing of WWPNs.

For all clustered FS vendors, *nix installations are very smooth and straightforward. Though they are really wordy, the manuals are on average 1.5 pages long, and guide you easily and quickly through the installation procedures. In no time, the FS client has been configured and can see the shared volume.

Bottom Line

There really is neither a “bad” file system, nor the ne plus ultra that’s perfect for everyone. Hence, I won’t recommend any particular shared file system. I’ve learned over the last 15+ years in the field that there are three major parameters that determine which file system fits best for a company: the specific workflow requirements, the expectation regarding features and performance, and, last but not least, the available budget.

But to give you at least a little support in your decision-making, I recommend you assess and evaluate your specific needs from every angle. For instance, if your shared media is mainly unstructured data and you’re looking for fast access and high performance, look thoroughly at the applications and client OS that need access. If you are in the field of high-performance computing or VFX, for example, and the clients in your network are without exception *nix based, you can choose pretty much every option available today, including GPFS and Lustre FS. If your network consists of heterogeneous clients running all three major OS, you are better off checking out the file systems that support all of them, or you may end up maintaining two or more file systems, and that would really defeat the purpose.

Either way, if you are new to the whole SAN experience, ask around, get training, or hire a professional to assist you. But please do your homework, as I’ve seen very questionable setups — even, unfortunately, from professional vendors. Keep in mind that a wizard-based setup will guide you but can’t make the necessary decisions for the best performance. And make sure you get it right the first time, because once the file system is in use, there is no chance to start over without investing a lot of time (and suffering a lot of pain).

