Why the Academy's IIF-ACES Post Process Means Better Color

For more about Kenny’s work on Justified, read F&V’s profile here.

Kenny said he had noticed, when shooting last year with the F35, that certain subtleties of color – like the tobacco-yellow of a certain kind of streetlamp – would go missing between the raw material that he shot and the processed footage he got back from post. “Sony had a tremendous amount of information in their S-Log [gamma function] recording format,” he explains. “By the time we took it to a post house and used their LUT on the material, we ended up losing things that we thought we had.”

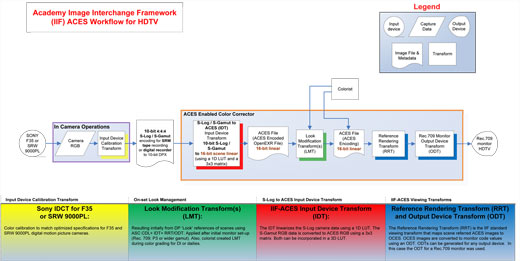

Officially, what was put in place at Encore Hollywood for Justified this season is called the Academy Image Interchange Framework (IIF) ACES Workflow for HDTV. We’ll call it the IIF-ACES workflow for short. IIF is the workflow process, and ACES, which stands for Academy Color Encoding Specification, is the color encoding that’s used to eliminate the limitations commonly imposed by HDTV post-production.

“If you have a digital camera that’s capable of recording wide dynamic range like film, but you then do a transform that puts it in a limited color-gamut space like Rec. 709, you’re going to clip highlights,” Clark explains. “All the detail that you know is there suddenly doesn’t appear to be there. So how do you maintain the full potential within a workflow all the way to the finished output?”

Diagram provided by Curtis Clark, ASC

In a nutshell, the process is pretty simple. Start by imagining an ideal camera that would record all visible colors and boast a dynamic range greater than that of any anticipated capture device in the real world. That imaginary camera would record to OpenEXR files using the ACES color-encoding spec. Footage from real cameras undergoes an input device transform (IDT) that converts its data into the ACES RGB values that the ideal camera would have recorded. Because ACES specifies an idealized color space – wider than that of either film or Sony’s S-Gamut system – no image quality will be lost in the transform process, no matter which camera captured the footage. As long as your camera is capable of capturing and recording the data, this workflow is designed specifically to preserve it.

Here’s how it works in practice. In this case, the Sony F35 or SRW-9000PL converts the camera RGB image to 10-bit 4:4:4 S-Log/S-Gamut encoding for recording on tape or to 10-bit DPX files. For color-grading, those 10-bit images are transformed via an IDT to 16-bit half-precision floating-point data in OpenEXR files. In this process, the S-Log camera data is linearized (using a one-dimensional LUT) and the S-Gamut RGB data is converted to the ACES RGB color gamut via a 3×3 matrix color transform.
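To make the two IDT steps concrete, here is a minimal Python sketch of a 1D linearizing LUT followed by a 3×3 matrix. Every constant in it is an illustrative assumption: the log-curve parameters are not Sony's published S-Log values, and the matrix is not the Academy's S-Gamut-to-ACES matrix. The function names (`log_to_linear`, `idt`, `to_half`) are hypothetical as well.

```python
import struct

# Illustrative placeholders only; NOT Sony's S-Log constants.
LOG_GAIN = 0.45     # assumed slope of the log curve
LOG_OFFSET = 0.60   # assumed code-value offset
LIN_BIAS = 0.04     # assumed bias inside the logarithm

def log_to_linear(v):
    """Invert a generic encoding v = LOG_GAIN * log10(x + LIN_BIAS) + LOG_OFFSET."""
    return 10.0 ** ((v - LOG_OFFSET) / LOG_GAIN) - LIN_BIAS

# Step 1: a one-dimensional LUT with one linear value per 10-bit code (0..1023).
LINEARIZE_LUT = [log_to_linear(code / 1023.0) for code in range(1024)]

# Step 2: a hypothetical camera-gamut-to-ACES matrix.
# Each row sums to 1 so that neutral grays stay neutral.
CAMERA_TO_ACES = [
    [0.75, 0.14, 0.11],
    [0.02, 1.00, -0.02],
    [-0.01, 0.01, 1.00],
]

def idt(rgb_codes):
    """Map a triple of 10-bit log code values to linear ACES-style RGB floats."""
    linear = [LINEARIZE_LUT[code] for code in rgb_codes]
    return tuple(
        sum(m * x for m, x in zip(row, linear)) for row in CAMERA_TO_ACES
    )

def to_half(x):
    """Round a float to 16-bit half precision, the sample type named above."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

if __name__ == "__main__":
    pixel = idt((512, 600, 480))
    print([to_half(channel) for channel in pixel])
```

The order matters: the matrix is only meaningful on linear light, so the LUT has to run first.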

Again, the point is that no image data is lost in this transform to the all-inclusive ACES color space, whether you’re working with footage from a Sony camera, the RED, the Alexa, or some other device. “It’s a bigger bucket,” says Clark. “The color-encoding spec for images input into ACES would be a common denominator for everyone.” The colorist then applies a series of look-modification transforms, including any look references from the DP, and goes to work grading the pictures in the 16-bit linear ACES-encoded OpenEXR file.

Those ACES images can’t be used for final image evaluation – they tend to be flat and very low-contrast – though they are adequate for checking some aspects of an image. Instead, a reference rendering transform (RRT) and a particular output device transform (ODT) produce a viewable image on a selected output device. For example, a Rec. 709 ODT allows a colorist to view images on an HDTV monitor. The same ODT is used to make the final deliverable HD tape.
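The shape of that viewing chain can be sketched in a few lines of Python. This is only a stand-in under stated assumptions: `tone_curve` is a simple placeholder for the reference rendering transform, `display_encode` is a plain power-law gamma (2.4 is assumed) standing in for a Rec. 709 ODT, and the output is a full-range 10-bit code. The Academy's actual transforms are considerably more elaborate.

```python
def tone_curve(x):
    """Placeholder rendering curve: compress unbounded scene values into 0..1."""
    x = max(x, 0.0)
    return x / (1.0 + x)

def display_encode(y, gamma=2.4):
    """Placeholder display encoding: clip to the display range, then apply 1/gamma."""
    y = min(max(y, 0.0), 1.0)
    return y ** (1.0 / gamma)

def view_as_10bit_video(scene_linear):
    """Scene-linear value -> full-range 10-bit display code (assumed convention)."""
    return round(display_encode(tone_curve(scene_linear)) * 1023)

if __name__ == "__main__":
    # Mid-gray, diffuse white, and a highlight well above white.
    for value in (0.18, 1.0, 16.0):
        print(value, view_as_10bit_video(value))
```

The sketch illustrates the key idea: the grade lives in scene-linear data that keeps values far above display white, and only this last stage decides how those values land on a particular screen.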

For Justified, which is colored on an Autodesk Lustre at Encore Hollywood, there’s one more tweak – the data in the 16-bit linearized ACES-encoded file is “quickly re-encoded into a log format,” Clark says, so that Lustre’s log toolset can be used for grading rather than its more video-centric (i.e., lift-gain-gamma) linear adjustments, which aren’t appropriate for the wide-gamut ACES data. The data is re-linearized after grading takes place.
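A rough Python sketch of that round trip follows. The log encoding here is a hypothetical pure log2 curve centered on 18 percent gray; the article does not say which log format Encore uses inside Lustre, so `STOPS_BELOW`, `STOPS_TOTAL`, and the function names are assumptions made for illustration.

```python
import math

# Assumed parameters of a hypothetical working log encoding.
MID_GRAY = 0.18      # linear value mapped to the middle of the log range
STOPS_BELOW = 8.0    # stops of range kept below mid-gray
STOPS_TOTAL = 16.0   # total stops covered by log values 0..1

def lin_to_log(x):
    """Encode a linear value as a 0..1 log value (floored to avoid log of zero)."""
    x = max(x, MID_GRAY * 2.0 ** -STOPS_BELOW)
    return (math.log2(x / MID_GRAY) + STOPS_BELOW) / STOPS_TOTAL

def log_to_lin(v):
    """Exact inverse of lin_to_log above the floor."""
    return MID_GRAY * 2.0 ** (v * STOPS_TOTAL - STOPS_BELOW)

def grade_in_log(rgb_linear, offset_stops):
    """Re-encode to log, apply a log-style offset, then re-linearize."""
    graded = []
    for x in rgb_linear:
        v = lin_to_log(x) + offset_stops / STOPS_TOTAL
        graded.append(log_to_lin(v))
    return graded

if __name__ == "__main__":
    # A one-stop offset doubles every linear value, shadows and highlights alike.
    print(grade_in_log([0.045, 0.18, 2.88], 1.0))
```

In this sketch an offset applied in log space works out to the same exposure change, measured in stops, for every tone. That uniform behavior is one reason log-style controls suit wide-range linear data better than controls designed around a video signal.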

“Everyone immediately sees the difference” when footage is graded using the new workflow, Clark says. “It’s not nuanced detail. It’s significant. That’s why the excitement is there.”

It’s important to remember that the IIF-ACES workflow is in no way television-specific. For a film project, the ODT would map the colors to film-printing densities for a film-out that correctly matched the P3 color space of a master grade for digital cinema. It’s not limited to footage captured by physical cameras, either. The ACES spec has applications for VFX work, where images are rendered out by virtual cameras. An IDT can transform that image data into a 16-bit ACES-encoded file alongside film or digital footage captured for the same production.

In fact, Clark says, the workflow has its roots in VFX. “It all started about five years ago, as an effort to integrate visual effects more effectively with scanned film in a DI environment,” he says. “You’re talking about OpenEXR vs. 10-bit DPX, so already there’s a mismatch between the two that has to be handled in the final compositing. This system harmonizes those two things perfectly, because everyone is working in the same color space, with the same file formats, and with the same linearized data. Also, with the 10-bit limitation of DPX, the bit-depth of these cameras and film scans with greater than 10 bits is not really coming through properly. There are limitations all the way around that the IIF-ACES workflow addresses.”

And Kenny, for his part, couldn’t be much happier with the improvement in his own pictures. “This stuff truly is going to make a difference,” he says.
