Scale-out storage makes it easier to scale performance or capacity to meet crunch-time deadlines in production, but it can also introduce data management complexity and performance bottlenecks that slow the entire operation.

Storage problems are hard to diagnose in scale-out architectures because poor visibility obscures the source of issues. When applications slow down, determining whether the hotspots are on the server (compute), network, caches, or storage takes investigation, and few studios can afford the time or cost of a trial-and-error hunt for the root cause.

Once admins locate problem applications, it can also be quite a challenge to get past short-term fixes and resolve the issues for good. IT can save a lot of time and trouble by choosing scale-out storage software whose data management capabilities make it easy to spot and solve problems, and then to avoid them in the future. The following list summarizes key features to look for when evaluating scale-out storage solutions:

- Works with both existing and new systems. Few VFX studios can afford to rip and replace their existing systems. This makes software that works with existing technologies a valuable, even essential, investment. At the same time, technology is evolving so rapidly that it is important for the software to be vendor agnostic and to work with industry-standard storage protocols, so that studios can quickly adopt new technologies as they emerge.

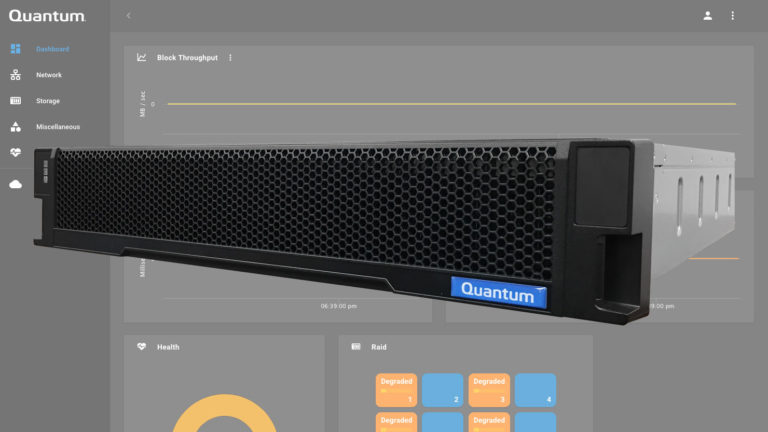

- Real-time visibility into workloads. Storage is frequently the underlying cause of application performance problems. When choosing a scale-out storage solution, it’s important that the software provide real-time visibility into storage workloads, as well as into the applications generating those workloads. Getting the right information quickly gives IT the ability to manage data proactively, before business is disrupted.

- Historical workload modeling. Enabling administrators to see workloads in historical context helps them make decisions based on whether a workload spike is a one-time or recurring event. This helps IT to better understand the datacenter environment and make more informed decisions regarding both manual and policy-based remediation.

- Global data management. It’s impractical to expect IT to separately manage application servers and the multiple storage types that support them. Software should make application and storage workload data visible and manageable from a single interface.

- Granular data management. It’s common for scale-out systems to distribute load at the client level, but this coarse and static load distribution makes remediating problems difficult. Moving the workload of an entire client from one storage device to another doesn’t give admins the control they need to manage data effectively. It’s entirely possible that moving client data to another storage device will simply move the hot spot to the new storage node. The ideal solution should enable administrators to distribute workloads granularly, past the client level and down to the file level.

- Application-centric policy engine. IT should be able to manage data by specifying the performance and protection an application requires, rather than estimating what infrastructure is needed to support those requirements. Smart scale-out software should allow IT to enter business data needs as input, and then automatically align those requirements with the optimal resource to meet them.

- Transparent, automated problem remediation. Once policies are applied, data should be able to automatically move to the optimal resource to meet the new or changing requirements, without impacting applications. Data should move intelligently, using knowledge of current infrastructure activity to ensure that one hotspot isn’t just replaced with another. The value of this non-disruptive, automated data mobility is immense, as it saves IT the need to plan and perform complex and expensive migrations, while eliminating business disruption.

- Smart policy management. While granular data management gives IT the control necessary to address workload distribution effectively, policies would be difficult to maintain if every one had to be managed manually across petabytes of data. To simplify policy management, IT should be able to automate policy assignment according to data requirements, while still having manual control when desired. For example, admins should be able to have software automatically assign different policies to different file types (such as .log, .tmp, .exr or .tiff), or based on file activity or inactivity (for example, automatically move any file that has not been accessed in the last 30 days to object/cloud storage).

- Automate Data Lifecycle Management (DLM). In addition to automating remediation for unplanned events, your scale-out software should support a planned workflow that automates the movement of data throughout its expected lifecycle. For example, files could be ingested initially on high bandwidth storage, moved to compositor workstations during compositing, and back to high bandwidth storage for rapid streaming during the editing process. Once the project is complete, the solution should automatically detect which files aren’t being accessed and move them to archival storage. This automates janitorial work for IT, while freeing high-end resources for other projects much faster, slowing storage sprawl and making it possible for studios to take on additional projects without purchasing additional infrastructure.
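To make the policy ideas in the list above more concrete, here is a minimal Python sketch of rule-based policy assignment: files are matched by type, files untouched for 30 days are sent to an archive tier, and an admin can still override the result by hand. It is an illustration only, not any vendor's implementation. The tier names, the extension-to-tier mapping, the `FileRecord` type and the `assign_tier` function are all hypothetical; only the file extensions and the 30-day threshold come from the examples above.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from pathlib import PurePosixPath
from typing import Optional

# Hypothetical tier names, chosen for illustration only.
SCRATCH = "scratch"
HIGH_BANDWIDTH = "high-bandwidth"
ARCHIVE = "object-archive"
DEFAULT_TIER = "general-purpose"

# Assumed mapping from file type to a default tier.
EXTENSION_TIERS = {
    ".log": SCRATCH,
    ".tmp": SCRATCH,
    ".exr": HIGH_BANDWIDTH,
    ".tiff": HIGH_BANDWIDTH,
}

# Files not accessed within this window are candidates for archival.
INACTIVITY_LIMIT = timedelta(days=30)


@dataclass
class FileRecord:
    path: str
    last_accessed: datetime
    pinned_tier: Optional[str] = None  # manual override set by an admin


def assign_tier(record: FileRecord, now: datetime) -> str:
    """Pick a storage tier for one file, most specific rule first."""
    # 1. Manual control always wins over automated assignment.
    if record.pinned_tier is not None:
        return record.pinned_tier
    # 2. Inactive files move to object/cloud storage.
    if now - record.last_accessed > INACTIVITY_LIMIT:
        return ARCHIVE
    # 3. Otherwise, assign by file type (case-insensitive extension).
    suffix = PurePosixPath(record.path).suffix.lower()
    return EXTENSION_TIERS.get(suffix, DEFAULT_TIER)
```

Because the rules operate on individual file records rather than on whole clients, the same function could be run across an entire namespace to produce a per-file placement plan, which is the file-level granularity the list argues for.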

Data management can be made easy with software that delivers comprehensive visibility into applications’ data access and storage use, while automating the data lifecycle and the remediation of hot spots, non-disruptively, across any storage customers choose to deploy. When you’re in the market to simplify data management and scale production, check this list to make sure you’re considering all the variables before making your next investment.
